How does Live Translation work in the real world?

For in-person conversations, the most involved scenario, your iPhone and AirPods work together. Once you start Live Translation and put in your AirPods, the system handles the rest. As the other person talks, the microphones in your AirPods capture their speech, and a moment later you hear a translated version in your ear. Your iPhone also shows a transcription on the screen so you can follow along visually.

When you reply, you just speak normally. Your iPhone and AirPods process your voice, translate it, and either play the translation aloud through your phone’s speaker or send it directly to the other person’s AirPods if they’re also using Live Translation.

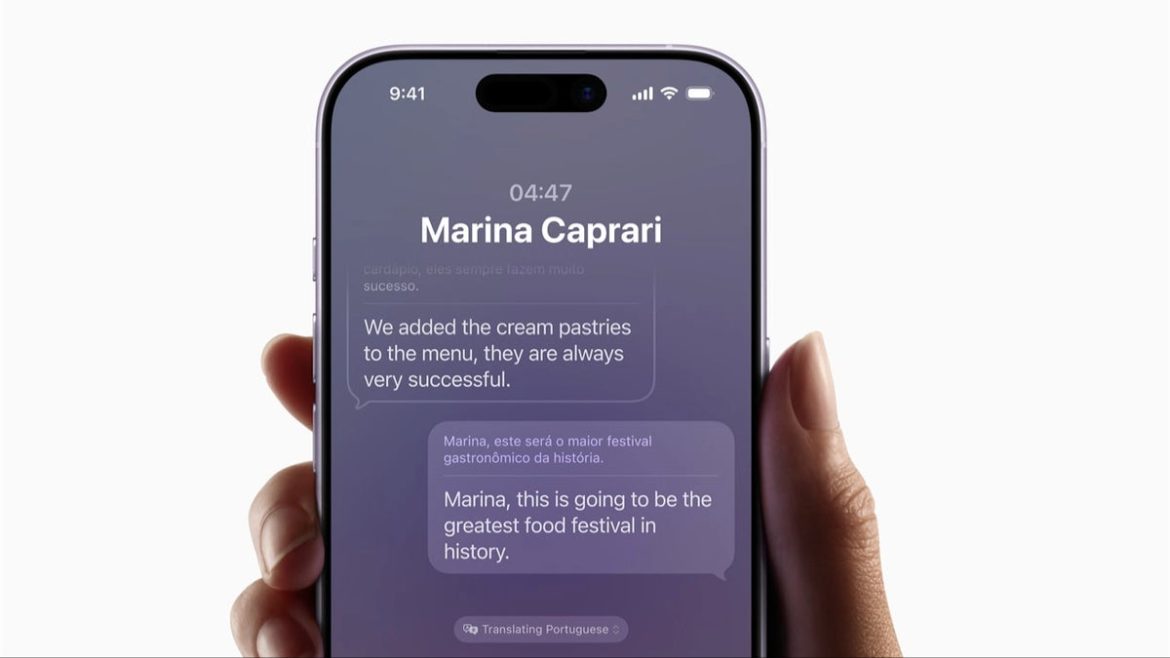

You can use Live Translation with the Phone app, FaceTime, Messages and more. | Image credit – Apple

In Messages, the setup is much simpler. Once you enable the feature for a specific conversation, any message you receive in a foreign language is instantly shown in your own language. You just have to:

- Open a conversation in the Messages app.

- Tap the contact’s name at the top of the screen.

- Enable “Automatically Translate”.

- Under “Translate From”, choose the language you want translated.

For standard phone calls, Live Translation works as a real-time interpreter. You turn it on during the call, and the translated audio is played back to you as the person speaks. In FaceTime, it’s handled through Live Captions, with translated subtitles appearing on the screen during the conversation.

What does Live Translation handle well?

Video credit – Apple

Because this entire system relies on Apple Intelligence, you can see both its strengths and its unfinished parts. The biggest strength, in my opinion, is how naturally Live Translation sits inside Apple’s existing apps. You don’t need to switch tools or juggle a separate translator; it’s built directly into the places where you already communicate.

What still gets in the way?

The biggest limitation right now is the narrow language support. Since Apple has to build and optimize each translation model to run on the device’s Neural Engine, the number of supported languages is much smaller than what cloud-based tools offer – Google Translate being the obvious example with its huge catalog.

There’s also the challenge of dealing with more complicated or nuanced speech. Slang, very technical vocabulary, complex sentence structures, or strong regional accents can lead to literal translations that lose context or create small misunderstandings.

And during face-to-face use, the dual-audio effect can feel a bit crowded. Hearing the original speaker and the translated voice at the same time can be hard to process, especially when the person talks quickly or the conversation moves fast.

Why does it matter?

Even with the limits, I see Live Translation as one of the more practical uses of mobile AI. I don’t care about AI tools that generate emojis or spit out some “creative” image. And I’m not relying on it for emails either – I still think we can type those ourselves.

But foreign languages? That’s where AI actually does something meaningful. You can’t learn every language on the planet, and when you’re traveling or chatting with someone who speaks something you don’t, this tool genuinely removes stress from the situation. It cuts out the guessing, the hand gestures, and the “let me Google this real quick” moments.

So yeah, it matters. It chips away at language barriers in a real, practical way. And even though it’s still locked to newer iPhones right now, we all know how this goes – give it a couple of years, and this will be something everyone can use.
